Introducing an Easy Way to Identify AI-Generated Videos

Since November, Google's Gemini has been able to tell whether an image was created with AI. Building on that capability, the Gemini chatbot can now also analyze videos to determine whether they were produced with AI tools.

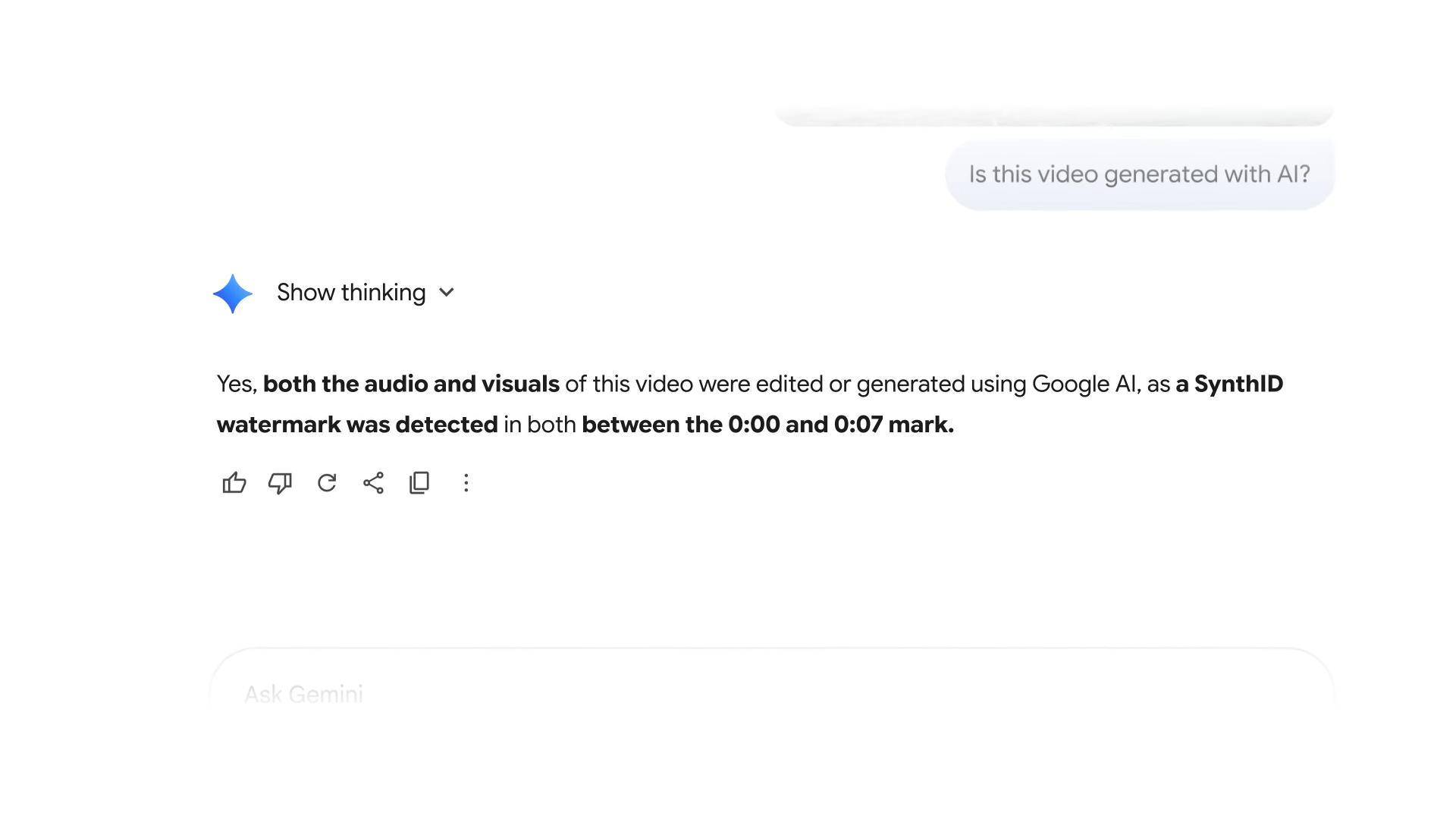

Checking a video is just as straightforward as checking an image. Open the Gemini app on your phone or use Gemini on your computer, upload the video you suspect might be AI-generated, and ask the chatbot directly, for example: "Is this video generated with AI?"

There are some limitations to keep in mind for now. As with image detection, Gemini can only identify videos created with Google's own AI tools. Uploaded videos must also be under 100 MB and no longer than 90 seconds.
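If you want to screen a clip before uploading it, the two limits above can be expressed as a simple check. This is a hypothetical helper, not part of any Gemini app or API; it assumes "100 MB" means decimal megabytes (100,000,000 bytes) and that you already know the clip's duration in seconds.

```python
# Hypothetical pre-upload check based on the limits described above.
# Assumption: "100 MB" is treated as decimal megabytes (100 * 1000 * 1000 bytes).
MAX_BYTES = 100 * 1000 * 1000
MAX_SECONDS = 90


def check_upload_limits(size_bytes: int, duration_seconds: float) -> list[str]:
    """Return the reasons a video would exceed the stated limits (empty list if it fits)."""
    problems = []
    # The file must be *under* 100 MB, so exactly 100 MB is rejected.
    if size_bytes >= MAX_BYTES:
        problems.append(f"file is {size_bytes / 1e6:.1f} MB; must be under 100 MB")
    # The video may be *up to* 90 seconds long, so exactly 90 s is accepted.
    if duration_seconds > MAX_SECONDS:
        problems.append(f"video is {duration_seconds:.0f} s long; limit is 90 s")
    return problems


print(check_upload_limits(45_000_000, 30))  # []
print(check_upload_limits(150_000_000, 120))
```

The second call reports both problems, since that clip is too large and too long.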

Once the video is uploaded, the chatbot analyzes both its visuals and its audio, then reports whether either or both were generated with AI. The results also note whether a SynthID watermark is present, which is central to how Google identifies AI-created content.

How SynthID Enables Accurate AI Content Detection

Google Gemini AI video detector. | Image by Google

Generative AI can create images, videos, and text that are often hard to distinguish from genuine human-made content. To address this, Google uses SynthID, a technology that embeds a digital watermark into all media created with Google's AI tools. The watermark is invisible to the human eye but can be picked up by Google's SynthID Detector.

When you ask Gemini whether an image or video is AI-generated, it uses the SynthID Detector to look for this watermark. If the watermark is found, Gemini responds that AI-generated content was detected. If not, you'll see a message saying "No SynthID detected," which means Gemini found no sign that the content was made with Google's AI tools.

Keep in mind that SynthID watermarks are embedded only in content created or modified with Google's AI tools. As a result, content made with other AI platforms, such as ChatGPT, cannot currently be identified by Gemini's detection system.

To make detection work across different AI tools, an industry group called the Coalition for Content Provenance and Authenticity (C2PA) is developing a universal metadata standard for labeling AI-generated content. Adoption is still in progress, and many AI applications have yet to implement the standard. Google recently confirmed that images generated by Nano Banana Pro include C2PA metadata as part of this effort.


Why AI Detection Tools Are More Crucial Than Ever

As AI technology advances rapidly, telling AI-generated content apart from real media is becoming increasingly difficult. Having followed AI closely since the days of much simpler models like ModelScope, I can often tell when content is AI-generated. Many people, however, including older relatives such as my parents, find this much harder.

Just recently, my 55-year-old father believed a fabricated image of the broken Carlisle Bridge in Lancaster was genuine until it turned out to be AI-generated. AI video and image detectors can help everyone recognize synthetic media more reliably. I also hope C2PA metadata is adopted quickly and becomes a universal standard, so that AI-generated content can be easily identified by any detection tool.